How Machine Learning Will Revolutionize Hearing Aids

When you hear the term “machine learning”, what’s the first thing that comes to mind? Do you think of computers making us redundant in the workplace? Do you think of armed robot uprisings?


If your initial reaction to machine learning is negative, you aren’t alone. Most people know very little about what machine learning really is, or how the science of machine learning benefits us as individuals on a daily basis. While there are many academic definitions out there, machine learning is – in essence – the science of pattern recognition and pattern prediction by computers.

Let’s take a real-world example. In my personal Google Photos library, I can search for the term “cat” and Google returns pictures I’ve taken of my cat (Niko).


But wait. I never told Google that there was a cat in these pictures.

How could Google possibly know about Niko? The answer, of course, is machine learning. Google has trained its software to recognize common objects and animals by exposing the software to millions of photos where the objects and animals have been identified. To get a better understanding of what Google “sees” in my images, I took the first photo of Niko and uploaded it to Google’s Vision API.


Google was 99% sure that Niko was a cat…

… and amusingly, a little less certain that Niko was a mammal (we’re talking about machine learning here, not true human intelligence). Aside from that inconsistency, Google did a pretty good job of identifying the patterns in the image. To accomplish this feat, Google’s pattern recognition engine has been trained on millions of images of cats, mammals, whiskers, fur, etc. Note: We always thought Niko was a Tabby, but after looking at some pictures of “Dragon Li” breed cats, we’re reconsidering.
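To make the “trained on labeled photos” idea a bit more concrete, here is a toy sketch in Python. It is not how Google’s Vision API works internally; the three hand-made features, the example values, and the `train` and `predict` functions are all invented for illustration. The sketch averages labeled examples into one “pattern” per label, then reports which pattern a new photo is closest to, along with a confidence score.

```python
import math

# Hypothetical, hand-made features: (ear pointiness, whisker length, body size).
# Real image models learn their own features from raw pixels.
LABELED_EXAMPLES = [
    ((0.9, 0.8, 0.2), "cat"),
    ((0.8, 0.9, 0.3), "cat"),
    ((0.3, 0.2, 0.6), "dog"),
    ((0.4, 0.3, 0.7), "dog"),
]

def train(examples):
    """Average the feature vectors of each label into one centroid per label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def predict(centroids, features):
    """Return (label, confidence) for the centroid closest to the new example.

    Confidence is a softmax over negative distances, so it sums to 1 across labels.
    """
    weights = {label: math.exp(-10 * math.dist(features, c)) for label, c in centroids.items()}
    total = sum(weights.values())
    best = max(weights, key=weights.get)
    return best, weights[best] / total

# A new, unlabeled "photo" described by the same three made-up features.
label, confidence = predict(train(LABELED_EXAMPLES), (0.8, 0.7, 0.3))
print(f"{label}: {confidence:.0%}")
```

Real systems learn from millions of raw images rather than three hand-picked numbers, but the basic loop is the same: labeled examples go in, and a label with a confidence score comes out.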

Aside from recognizing your cat photos, what are other real-world applications of machine learning that might impact your life?

Real-world applications of machine learning:

- Google search

- Recommendation engines on services like Netflix and Spotify

- “Fastest route” suggestions on Google Maps

- Providing ETA estimates on ridesharing apps like Uber

- Providing delivery estimates on food delivery apps like UberEATS

- Fraud detection in payment systems (like PayPal) and finance

- Medical diagnosis in clinically-complex cases

- Drug prescription assistants

- Identifying tumors and skin cancer

- Spam filtering on popular email clients like Gmail

- Speech recognition (Google, Alexa, Siri, etc.)

- Self-driving cars

- Facial recognition, used by Facebook and to help identify criminals

- Robots that help care for the elderly

- Automatic closed captioning for spoken word and sign language

- Hearing aid performance optimization (Added to the list as of 2018)

Machine Learning and Hearing Aids

How does the science of machine learning offer to improve hearing aids? Researchers in the field have offered a few concrete goals:

- Better hearing in background noise

- Improved sound quality

- Greater listening comfort

Better hearing in background noise

As any experienced hearing aid user knows, hearing in background noise is extremely difficult. Solving the background noise problem is the elusive holy grail of hearing aid technology, and while there have been a number of technological innovations since the dawn of digital hearing aids (like the directional microphone, and beamforming directionality), only incremental progress has been made in providing a solution.

Over the past few years, DeLiang Wang – a researcher at Ohio State University – has been working on using machine learning and “deep neural networks” to make it easier to hear a conversational partner in background noise. Wang’s software enables listeners, both those with normal hearing and those with hearing loss, to hear significantly better in background noise.

In Wang’s own words: “People in both groups showed a big improvement in their ability to comprehend sentences amid noise after the sentences were processed through our program. People with hearing impairment could decipher only 29 percent of words muddled by babble without the program, but they understood 84 percent after the processing.”

Incorporating Wang’s software into a hearing aid would almost certainly revolutionize hearing aid technology, but unfortunately the software is not on the market yet. Based on the following statement, we can safely assume that there will be a significant wait before this technology will be available to consumers.

“Eventually, we believe the program could be trained on powerful computers and embedded directly into a hearing aid, or paired with a smartphone via a wireless link, such as Bluetooth, to feed the processed signal in real time to an earpiece.”

Improved Sound Quality and Greater Listening Comfort

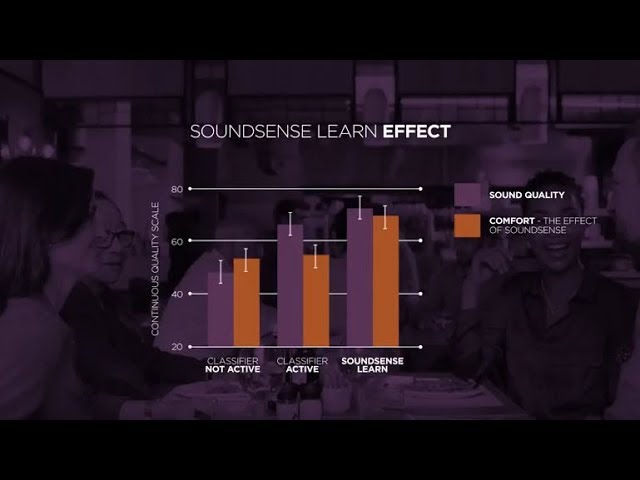

While the solution to background noise is still over the horizon, real progress has been made on improving sound quality and listening comfort through machine learning. The results of a recent double-blind study suggest that machine learning can help hearing aid users find their ideal sound settings more effectively: settings that lead to greater sound quality and listening comfort in a variety of difficult listening situations.

SoundSense Learn for Widex EVOKE

The good news? This technology has already hit the market, and is available globally right now. The technology is dubbed “SoundSense Learn”, and it’s available exclusively on the Widex EVOKE™ hearing aid platform. Here’s a description from Widex (we’ll try to break it down in simple terms below):

“SoundSense Learn is an implementation of a well-researched machine learning approach for individual adjustment of hearing aid parameters. SoundSense Learn is designed to individualize hearing aid parameters in a qualified manner, sampling enough possible settings to adjust the hearing aid according to the users’ preference for his/her auditory intention with a high degree of certainty. From a practical perspective, this means the user is given control of a selected set of parameters using an application (app) that minimizes the number of interactions needed to reach an optimal setting.”

What does all this mean for you?

How will you use Widex’s machine learning technology, and how will it help you? Essentially, it boils down to optimizing your hearing aid settings with the EVOKE™ companion app. The app lets you optimize your Widex EVOKE™ hearing aids for any number of listening situations using A/B comparisons. Let’s say you’re in your favorite pizza restaurant, and you find the sound overwhelming and unpleasant. To improve the sound quality, and make the sound more comfortable, you grab your phone and launch the EVOKE™ app, where you’re presented with two sound options (A or B). You listen briefly to both options and then select which of the two sound profiles you prefer.

If this all seems overly simplistic and low-tech, it’s because it was designed to be that way. The magic of Widex’s machine learning approach is taking the complexity of hearing aid sound optimization and packaging it in an approachable, user-friendly app that almost anyone can understand and operate. By giving the user a very straightforward task (choose A or B) that adjusts multiple hearing aid settings in a meaningful way (to provide sound optimization), Widex has hit a home run for users who wish to have more control over their individualized sound settings. And it gets better. Over time, EVOKE™ gets to know your listening preferences and, through machine learning, can predict the right sound settings without you needing to do anything at all.
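For the curious, here is a rough Python sketch of how a string of “A or B?” answers can steer several settings at once. This is not Widex’s algorithm, which Widex describes as minimizing the number of interactions needed; the version below is a deliberately naive search, and the `ab_tune` function, the three settings (bass, mid, treble), and the simulated listener are all assumptions made up for illustration.

```python
def ab_tune(prefers_b, start, step=4.0, min_step=0.5, max_comparisons=200):
    """Tune a list of settings using only "A or B?" answers.

    prefers_b(option_a, option_b) must return True when the listener likes B better.
    Option A is always the current setting; option B nudges one setting up or down.
    """
    current = [float(value) for value in start]
    comparisons = 0
    while step >= min_step and comparisons < max_comparisons:
        improved = False
        for i in range(len(current)):
            for delta in (step, -step):
                option_b = list(current)
                option_b[i] += delta
                comparisons += 1
                if prefers_b(current, option_b):
                    current = option_b  # the listener picked B, so keep it
                    improved = True
                    break
        if not improved:
            step /= 2  # nothing sounded better, so try finer adjustments
    return current, comparisons


# Simulated listener with a hidden ideal (bass, mid, treble) that the app never sees.
HIDDEN_IDEAL = (-2.0, 3.0, 5.0)

def distance_from_ideal(settings):
    return sum((s - ideal) ** 2 for s, ideal in zip(settings, HIDDEN_IDEAL))

def simulated_listener(option_a, option_b):
    return distance_from_ideal(option_b) < distance_from_ideal(option_a)

tuned, answers_needed = ab_tune(simulated_listener, start=(0, 0, 0))
print(tuned, answers_needed)
```

The point of the sketch is that the listener never touches a single slider. Every decision is a simple preference, yet the settings still drift toward what that listener actually wants.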

While this video is not necessarily targeted at end-users, it does a great job explaining how the technology is used, and how it benefits users:

Closed captions are available on this video. If you are using a mobile phone, please enable captions by clicking on the gear icon.

What about the cat photos?

So how does this all relate to Google Photos and pictures of cats? When Google’s Vision API looks at a picture, it may as well be looking at a bunch of noise: randomized colored pixels with no meaning whatsoever. Google’s machine-learning technology takes that visual noise and gives it meaning by deploying sophisticated pattern recognition techniques that detect the outline of a cat, the markings of a Tabby (or Dragon Li), etc.

When your Widex EVOKE™ hearing aids are presented with the acoustic noise of a crowded restaurant, they begin to undertake a similar process. What is the noise? What patterns are present in the noise, and what type of sound setting is this? Once the patterns have been recognized by the hearing aid software, the sound settings of the hearing aids adjust to provide optimal sound quality and listening comfort – all based on machine learning, and what you have taught the EVOKE™ app about your listening preferences.
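As a loose illustration of that “recognize the situation, then recall what you taught it” idea, here is a minimal Python sketch. It assumes a sound environment can be summed up by just two numbers (average loudness and the share of the sound that is speech) and that the app remembers the settings you preferred in each one. The feature choice, the stored values, and the `recall_settings` function are hypothetical and are not taken from Widex.

```python
import math

# Hypothetical memory of what the listener has taught the app so far.
# Each entry pairs a crude description of a sound environment,
# (average loudness in dB, share of the sound that is speech),
# with the settings the listener ended up preferring there.
LEARNED_PREFERENCES = [
    ((75.0, 0.60), {"environment": "busy restaurant", "bass": -2, "treble": 3}),
    ((40.0, 0.10), {"environment": "quiet room", "bass": 0, "treble": 0}),
    ((85.0, 0.05), {"environment": "street traffic", "bass": -4, "treble": 1}),
]

def recall_settings(loudness_db, speech_share, memory=LEARNED_PREFERENCES):
    """Return the stored settings whose environment looks most like the current one."""
    def distance(entry):
        (known_db, known_speech), _settings = entry
        # Divide by 50 so loudness and speech share carry comparable weight.
        return math.hypot((loudness_db - known_db) / 50.0, speech_share - known_speech)

    _features, settings = min(memory, key=distance)
    return settings

# The microphones pick up something loud with plenty of speech in it.
current = recall_settings(loudness_db=72.0, speech_share=0.55)
print(current["environment"], current)
```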

The future of machine-learning hearing aids?

Hearing aids are now capable of tapping into sophisticated machine-learning software by connecting wirelessly to our smartphones. Through Widex EVOKE™, we have now seen the first real-world application of such technology, one that can provide tangible benefits to real hearing aid users.


What’s next for machine-learning hearing aids?

We don’t know for sure, but we probably won’t see the next real advance for hearing aid users until machine-learning computations can be performed on the hearing aid itself (and that could take a long while). Once hearing aids are capable of supercomputer-class computations without the assistance of smartphones, we should be getting very close to a real-time speech-in-noise parser, a technology that should finally help you hear better in background noise.

