Live Caption on Google's Android Q is a Killer App

I've been a reasonably happy citizen of the Apple universe ever since I purchased my first Mac Classic in 1988. And when Apple introduced the iPhone, I was all in. I never seriously thought about trading mine in for an Android smartphone—until now. That’s because Google just announced that its new Android Q OS will come with a Live Caption application and other accessibility features.

A Google video shows how Live Caption helps people with hearing loss navigate the world of digital audio and video. Closed captions are available on this video. If you are using a mobile phone, please enable captions by clicking on the gear icon.

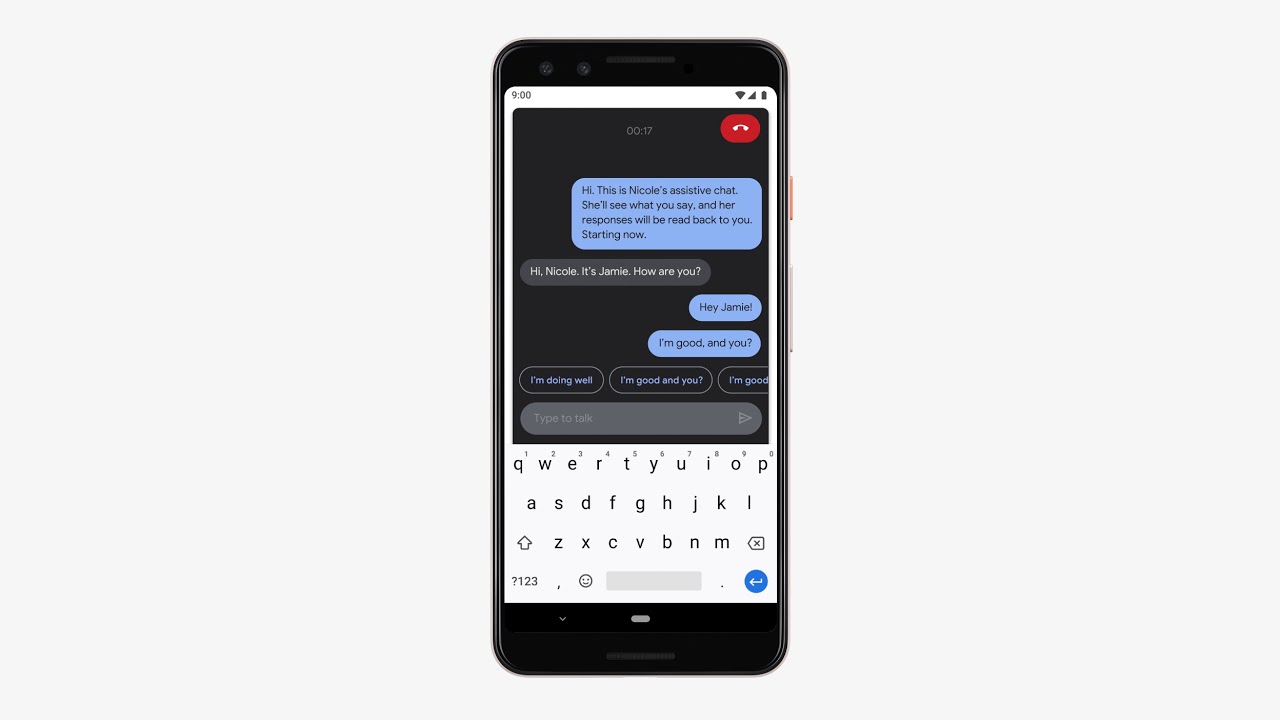

Live Caption creates instant, real-time transcriptions of videos, podcasts, and audio messages in any app running on Android Q. As soon as speech is detected, captions appear, even if the app has no built-in support for captioning. It even captions video and audio recorded by the user.

If Live Caption works anywhere near as well as the demonstration yesterday at the annual Google I/O developer conference, it might just be the kind of killer app that will entice diehard iPhone users like me to consider making the switch.

As Google said in a blog post about Android Q:

For 466 million deaf and hard of hearing people around the world, captions are more than a convenience—they make content more accessible. We worked closely with the Deaf community to develop a feature that would improve access to digital media. With a single tap, Live Caption will automatically caption media that’s playing audio on your phone. Live Caption works with videos, podcasts and audio messages, across any app—even stuff you record yourself. As soon as speech is detected, captions will appear, without ever needing Wifi or cell phone data, and without any audio or captions leaving your phone.

Google Android Q Announcement

Captions wherever and whenever you need them

With my two cochlear implants, I do very well in live conversations and, for the most part, on the phone. But I still have a lot of trouble understanding speech on TV. And audio recordings and web videos played on the computer and phone can be even more problematic. I enable closed captions regularly when watching TV, but for other kinds of media, there’s often no adequate solution.

When I do view videos on my phone or computer, I don't like to turn the volume up too loud for fear of bothering others. And devices that have substandard speakers can make speech near-unintelligible even for many people with good hearing.

Live Caption, along with other applications enabled by Google's new Live Transcribe technology, promises to change all that with captions wherever and whenever you need them.

Google CEO Sundar Pichai demonstrates Live Caption in his conference keynote address (46:50 in the video). Closed captions are available on this video. If you are using a mobile phone, please enable captions by clicking on the gear icon.

Breakthroughs in machine learning enable Live Caption and Live Relay

Live Caption converts speech to text in real time. Using breakthroughs in machine learning, it provides immediate transcriptions of YouTube videos, podcasts, video chats, Skype calls, and audio and video recordings created by the user. All processing takes place on the user’s Android phone.

Live Caption works in the background without interrupting other apps. And because it doesn't need to send data to the cloud for processing, it eliminates delays from sometimes spotty wireless connections.

For regular phone calls, there is also a new Live Relay feature. It’s a lifesaver for people who are deaf or have severe hearing loss, and for those who have trouble being understood on the phone.

Live Relay allows the phone to listen and speak for users while they type. Because responses are delivered instantly and the feature offers real-time writing suggestions, users can type fast enough to conduct a normal phone call.

Google’s Live Relay converts typing to speech and speech to text to enable phone calls without talking or listening. Closed captions are available on this video. If you are using a mobile phone, please enable captions by clicking on the gear icon.

Live Transcribe: technology for multiple applications

Live Caption and Live Relay are practical applications that use the Live Transcribe technology Google announced in February. A team led by Dimitri Kanevsky, a deaf Google researcher, collaborated with deaf students and faculty at Gallaudet University to develop the new real-time voice-to-text transcription technology.

Live Transcribe is an artificial-intelligence-based technology that uses machine learning to constantly improve speech-to-text conversion. It's being integrated into apps for personal computers and mobile phones and supports external microphones in wired headsets, Bluetooth headsets, and USB mics.

Live Transcribe will be available in up to 70 languages and dialects. In addition to the new Android Q OS, Google says it will be available for all smartphones running Android 5.0 or later.

Google's Project Euphonia assists people with speech impediments

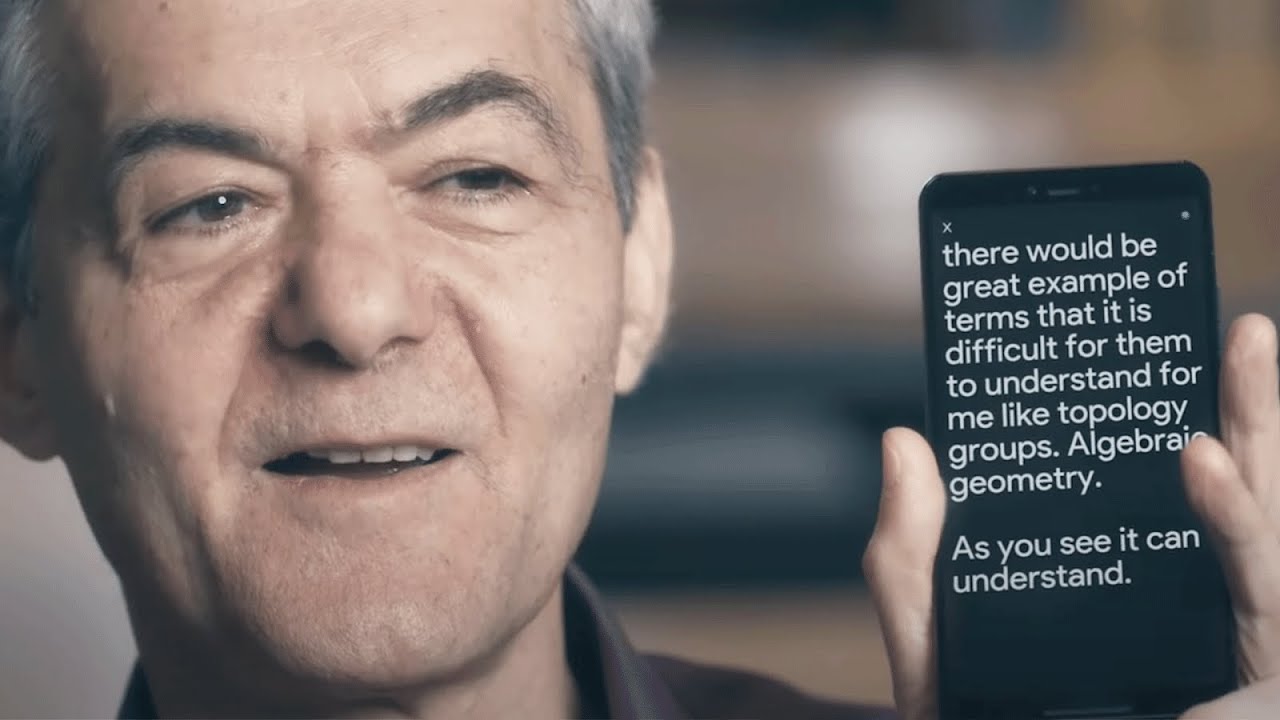

In addition to aiding people with hearing loss, Google said it will introduce technology to help people with speech impediments to communicate more clearly. Its Project Euphonia uses artificial intelligence to improve computers’ abilities to understand diverse speech patterns, such as impaired speech.

Google’s software turns recorded voice samples into a spectrogram, or a visual representation of sound. The computer then uses common transcribed spectrograms to "train" the system to better recognize this less common type of speech.
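To make the idea of a spectrogram more concrete, here is a minimal Python sketch. It is not Google's code, and Project Euphonia's actual processing is not described in this post. The function name `spectrogram`, the frame and hop sizes, and the synthetic 1 kHz test tone standing in for a voice sample are all assumptions chosen for illustration. The sketch slices the audio into short overlapping frames and measures how much energy each frame holds at each frequency, which is the grid of numbers a spectrogram image displays.

```python
import cmath
import math


def spectrogram(samples, frame_size=64, hop=32):
    """Return a list of frames; each frame lists one magnitude per frequency bin."""
    # Hann window: tapers each frame's edges to reduce spectral leakage
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        # Naive discrete Fourier transform, keeping bins 0..frame_size/2
        mags = []
        for k in range(frame_size // 2 + 1):
            total = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n in range(frame_size))
            mags.append(abs(total))
        frames.append(mags)
    return frames


# Hypothetical stand-in for a recorded voice: a 1 kHz tone sampled at 8 kHz
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE) for t in range(256)]

spec = spectrogram(tone)

# Find the strongest frequency bin in the first frame and convert it to hertz
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / 64
print(len(spec), "frames; strongest frequency in first frame:", peak_hz, "Hz")
```

Each row of `spec` is one moment in time and each column is one frequency band. Plotted as an image, rows of real speech would show shifting bands of energy rather than the single steady line this test tone produces.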

Google partnered with the non-profit organizations ALS Therapy Development Institute (ALS TDI) and ALS Residence Initiative (ALSRI) to record the voices of people who have ALS, a neurodegenerative condition that can result in the inability to speak and move.

After learning about the communication needs of people with ALS, Google optimized AI-based algorithms so that mobile phones and computers can more reliably transcribe words spoken by people with speech difficulties.

Google scientists explain how Project Euphonia will improve accessibility and communications for people with speech impediments. Closed captions are available on this video. If you are using a mobile phone, please enable captions by clicking on the gear icon.

Falling in love again

Don’t get me wrong. I still love my iPhone, my iPad, my MacBook Pro, my iMac with its Retina display, and all other things iOS and macOS. I even hung onto my vintage Mac Classic.

But I’m afraid that with Google’s Live Caption, I just might end up falling even a little more in love with the brave new accessible world of Android.

Update: Pixel 4 launched with Live Caption

Today, Google launched both Live Caption and the new Pixel 4 and Pixel 4 XL smartphones. Check out this new video showing Live Caption on the Pixel 4.


I have been using the app when I attend school board and county meetings. It works great!

TF