How Artificial Intelligence Could Completely Restore Hearing

While scientists are racing to solve the hearing loss epidemic through biotech, advances in AI and machine learning may offer an alternative for those who want to regain their hearing and don’t mind becoming a cyborg in the process.

Background noise is the enemy

For most people with hearing loss, background noise is the single biggest problem. Even with fancy tech like hyperdirectional beamforming microphone arrays, modern hearing aids are very limited in their ability to remove noise from a conversation. While some apps have made progress on background noise removal, and Apple and Bose are jumping into the hearing tech space, we are still a long way off from 100% noise removal, especially for more complex noise like the chatter of a busy restaurant.

Lip-reading AI could solve the noise issue

Technology has advanced rapidly in the past few years in the field of AI-based lipreading. Sitting at the nexus of computer vision, artificial intelligence, and speech sciences, AI-lipreading is the process by which a computer “watches” a video of a person speaking—without sound—and spits out an audio file of the person’s audible speech at the end. Still confused? Check out the video below for a full explanation of how this technology works.

Video courtesy Two Minute Papers. Fast forward to 2:30 for speech samples. Closed captions are available on this video. If you are using a mobile phone, please enable captions by clicking on the gear icon.

Clean speech, finally

As you can see from watching the video above, computers are now able to create extremely clean speech based on computer vision alone. I cannot overstate the significance of this from the perspective of people suffering from hearing loss. If a computer is able to focus on a single person in a crowd, and reproduce their voice without the requirement of removing background noise, this technology effectively leapfrogs the entire science of noise removal, and offers hearing loss sufferers a tangible reason to be optimistic about the future.

Focusing on the right person

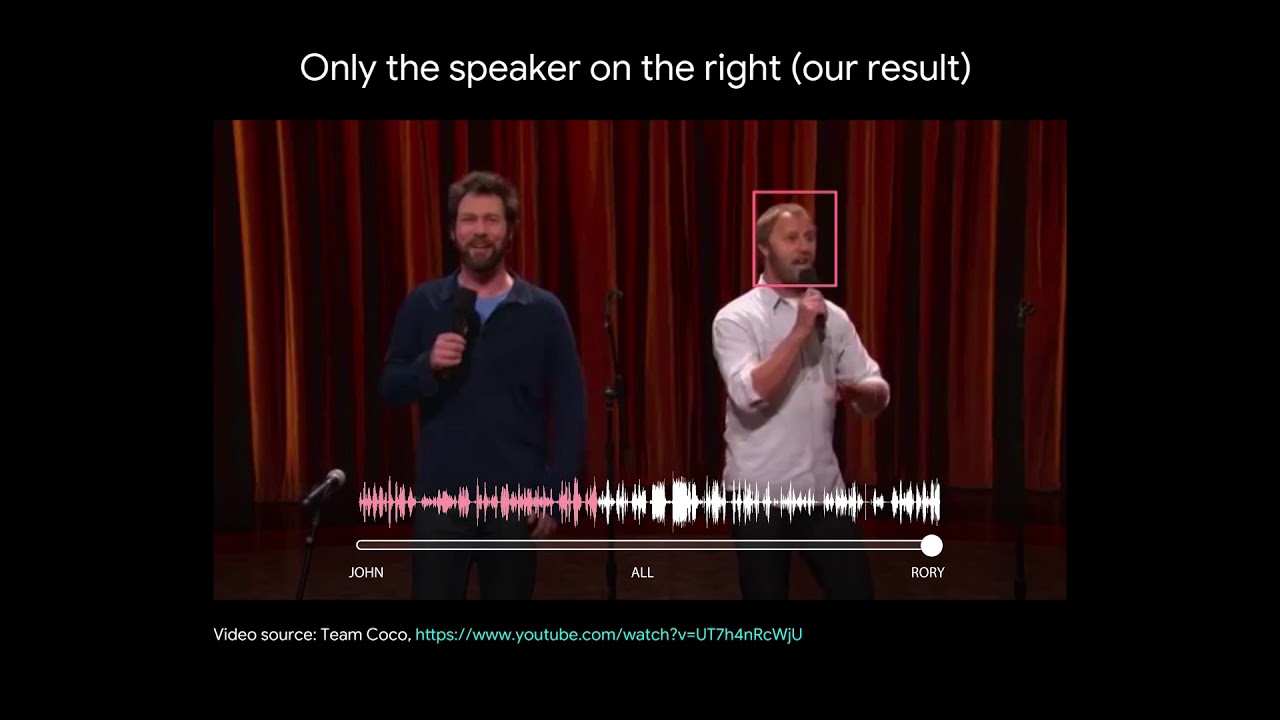

Back in 2018, Google AI announced their progress on “Looking to Listen”, a technology that allows listening to a specific person in a video scene, even when multiple people in the scene are speaking simultaneously. Google used a clever audio and visual feature detection model to deliver extremely clean audio based on the single person you want to isolate in the video. An example of this is seen in the video below.

As you can see in this 2-year-old video, the technology is already available for audio-focusing on a single person via computer vision. While Google relied on audio and video features to reproduce speech, modern approaches (discussed in the video above) have gone a step further and eliminated the need for audio features entirely. This means it should be possible to reproduce high-quality audio-focused speech regardless of whether the speaker is completely drowned out by loud, complex background noise.

Real world application?

So how would this tech work in the real world? Would you need to lug around a camera, computer, and headphones, and click on the people you want to listen to? And can something like this even work in real time? Currently, there is no way to run an AI-based lipreading solution in real time, because reproducing the missing speech still takes significant computer processing time.

And who wants to lug around all that equipment anyway? Well, remember Google Glass? The head-mounted camera and heads-up augmented reality display? I know it was a privacy nightmare, and I know everyone hated it, but maybe we could all make an exception for people with hearing loss?


Modern Google Glass sports a Qualcomm Quad Core, 1.7GHz processor and 8MP head-mounted camera.

I could imagine the Google Glass becoming relevant in a new world of computer-vision assisted speech focusing. AI tech would need to advance from its current state to enable real-time speech focusing, but I could see Google Glass (or similar) wearers simply looking at the person they want to hear, and AI doing the rest.

Delivering clean speech to the ear and beyond

After the heavy lifting is done, how does the cleaned-up sound get delivered to the ear? For people with normal hearing, this should be easy. You could use the onboard speaker on Google Glass (or a competing product) to get a speech boost in background noise. Or you could use any number of wireless earbuds to deliver the cleaned audio to your ears.

For those with hearing loss, the situation is a little more complicated. For those with mild to moderate hearing loss, wireless buds, or a pair of Bluetooth hearing aids, should do the trick just fine. But for those suffering from profound sensorineural hearing loss, pristine sound won’t make it through the distorting effects of a deteriorated inner ear, no matter how cleanly it is delivered. Many with profound hearing loss struggle to hear loud, clear speech, even during one-on-one conversations.

So how do we get past a deteriorated ear to deliver clean sound to those with profound hearing loss? Well, aside from implanting an electrode array into the inner ear via a cochlear implant, or placing an implant on the brainstem, there currently aren’t a lot of options. However, thanks to Elon Musk, we now have reason to believe a miracle may be on the way. Musk dropped this bombshell last week, which has everyone in the hearing loss world giddy with excitement:

Yes

— Elon Musk 🌹 (@elonmusk) July 19, 2020

Yes, this is where things get really crazy and start to sound more like sci-fi than reality. But Musk has put together a fantastic team of biotech innovators, and has created a brain-machine interface with over 3,000 electrodes that target specific brain regions. Musk thinks Neuralink will eventually transmit music to the brain directly. And if that turns out to be true, Neuralink should be able to deliver clean human speech to the brain as well.

Video courtesy Real Engineering. Closed captions are available on this video. If you are using a mobile phone, please enable captions by clicking on the gear icon.

How soon can we expect all of this?

Unfortunately, I don’t have the answer. I am encouraged by the rapid advancement of computer vision solutions to speech reproduction, and the great potential of Neuralink for delivering audio directly to the brain. I will be watching this space closely, and providing updates to our readers as I learn more.
